A comprehensive tutorial on starting with Terraform

Getting started with Terraform

At Salto, we enable modern software development and DevOps methodologies for the development, testing and deployment of business applications. We do this by describing components and elements in NaCl, a declarative language we developed in-house.

This is aligned with Terraform's approach of managing infrastructure using declarative code, so using Terraform internally was a natural choice for us.

In this post we will take the first step in managing infrastructure using Terraform. The post is structured as a tutorial, with the goal of setting up a Git repo that a small team can use. For state management, we will use the S3 backend (more on that later).

This post is technical and I assume that you, the reader, are familiar with Git and GitHub/GitLab, feel comfortable using the CLI, and have basic knowledge of coding and AWS.

Infrastructure as code (IaC)

In a nutshell, infrastructure as code (IaC) is the process of managing and provisioning computer data centers through machine-readable definition files, instead of through physical hardware configuration or interactive configuration tools [wikipedia].

With infrastructure as code, a team of developers and operators is able to track, review, roll back and generally organize infrastructure in the form of definition files and code blocks.

Combined with a version control system such as Git, infrastructure as code provides a powerful way of managing and approving changes to the infrastructure, while reliably satisfying compliance requirements - as a team or as individual contributors.

What is Terraform?

Terraform by HashiCorp is an infrastructure as code tool for building, updating and destroying, well, infrastructure.

The low-level syntax of Terraform configuration files is HCL (HashiCorp Configuration Language), a declarative language that is also used in a few of HashiCorp's other products. This language lets you define complex infrastructure as code by using arguments and blocks. HCL is easier for humans to read and write than JSON, for example, as we will see in the next sections.

This space also has some infrastructure automation tools that help with automating and collaborating on infrastructure changes made with Terraform. I will devote one of my next posts to one of these tools, Atlantis.

If you want to take a deeper dive into this subject, then I suggest reading Emily Woods' mini series on IaC and Terraform.

Here is the general flow for this tutorial:

1. Create a user in AWS

2. Prepare the environment

   1. Download Terraform

   2. Get access and secret

   3. Create a new Git repo

3. Configure the Terraform AWS provider

4. Create an S3 bucket and DynamoDB table for state and locking

5. Create some AWS resources using Terraform

6. Update some AWS resources using Terraform

7. Destroy some AWS resources using Terraform

Create a user in AWS

Please create a new AWS account or use an already existing one for this tutorial.

I will not go through the entire process of creating an account, but you can follow this official guide to learn more about it.

Once you have an AWS account, create a new user in IAM. This will be our Terraform user (you can name it terraform).

Then attach the following policies to the user:

- AmazonS3FullAccess

- AmazonDynamoDBFullAccess

- AmazonEC2FullAccess

You can find more details in this guide.

Get the terraform user's Access key ID and Secret access key and keep them at hand, as we will use them next.

Prepare the environment

Install Terraform

First, download Terraform from Terraform's download page. In this tutorial we will use Terraform 0.13.3 on macOS. Running terraform (the Terraform binary) should be very similar on most Linux distributions.

The base Terraform distribution includes a single binary, terraform (or terraform.exe on Windows).

Install this binary somewhere in your $PATH. If you're using macOS and Homebrew, you can install Terraform by running brew install terraform. Some users are fine with always running the latest version. Others prefer to avoid it, because there are usually breaking changes between minor versions of Terraform (e.g. 0.11 ⇒ 0.12 ⇒ 0.13) and brew may upgrade Terraform as part of a routine update.

Verify that terraform is working by running terraform version. Here's my output:

$ ./terraform version

Terraform v0.13.3

Install Git

If you don't already have Git installed on your system, please install it (to do that, you can follow this guide).

Create a new Git repository

Create a testing directory somewhere in your OS. I will create mine in ~/dev/terraform_tutorial.

$ mkdir -p ~/dev/terraform_tutorial

In this directory, run:

$ git init

Initialized empty Git repository in ~/dev/terraform_tutorial/.git/

For now, we will use this Git repository locally, but you could just as well create one in GitHub, GitLab, or your favorite Git hosting provider and then clone it locally.

Configure the Terraform AWS provider

State

To map real-world infrastructure to the resources defined in your configuration, Terraform needs to keep the last known state of those resources, along with their metadata.

The state is saved by default on the local disk but can also be stored remotely, and, indeed, there are quite a few options to choose from for storing the state remotely. A remote state is a better fit for infrastructure shared by a few members or a team.

In Terraform, remote state is a feature of backends.

In our example, we will keep a local state file in the `state` directory/module. Note that this is not a best practice; we do so only for the sake of this blog post. The best practice would be to keep everything in a dedicated remote state store. If you want to bootstrap that same remote store by using Terraform itself, you run into a chicken or the egg dilemma, if you will.

You can either migrate your state on initialization or just use a different type of backend for initializing the entire setup (such as Terraform Cloud, which is free for small teams).

See the official documentation on state here.

State locking

We want to work on shared configuration definitions, and we have a remote state. However, how can we guarantee that a remote state is not being written by two (or more) different members, potentially overwriting each other's state items?

This can be solved by using state locking (see documentation).

In Terraform, every directory is a module. A hierarchy of modules can be represented by a hierarchy of directories.

Note that in this tutorial we're using the term module in order to be consistent with Terraform's technical terms. However, it is not an introduction to modules and we won't explain how to use them in this post.

Every module includes input variables (to accept values from a caller module), output variables (to return results to a caller module) and resources (the blocks that define infrastructure objects).

It is recommended that every module includes at least:

- main.tf - for the main module configuration - resources.

- variables.tf - for the module input variables definitions.

- outputs.tf - for the module output variables definitions.

A main.tf file will typically hold a few kinds of blocks: a terraform {...} block, which holds configuration for Terraform itself, one or more provider configuration blocks, and some resources.

For example:
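The original listing is not reproduced here, so the following is only a minimal sketch of that layout. The region, the resource label and the bucket name are placeholders chosen for illustration, not values from this tutorial.

```hcl
# Settings for Terraform itself
terraform {
  required_version = ">= 0.13"
}

# Provider configuration (the region is an assumption)
provider "aws" {
  region = "eu-central-1"
}

# A resource: "example" and the bucket name are hypothetical
resource "aws_s3_bucket" "example" {
  bucket = "my-example-bucket"
}
```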

State module

In our local git repository, let's create a new directory/module and name it state:

$ mkdir state

In this directory/module we will create our bootstrap infrastructure as code, in order to work on an infrastructure as a team.

Finally, we reached a state where we can write our first module!

This module will have a local state file and will be the only part that is not kept in a remote state. Note that after bootstrapping the state and locking mechanism by using Terraform, we can either import the resources or use some other tactic in order to manage the bootstrapping configuration items in the remote state.

Personally, I like to keep this module's state file in Git.

Creating S3 bucket for state and DynamoDB table for state lock

This can be done by writing a state module. We will keep this module's state file in the Git repository.

cd to the state directory. There, using your favorite text editor, create and edit two files: main.tf and outputs.tf.

main.tf: create the required resources - S3 bucket to save the remote state and the DynamoDB table to use as state lock:
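The original listing is missing here. A sketch that is consistent with the resource addresses and names printed later in this post (aws_s3_bucket.tfstate, aws_s3_bucket_public_access_block.default and aws_dynamodb_table.tfstate_lock) could look like the following. The region, the versioning setting and the billing mode are assumptions. The inline versioning block matches the 3.x AWS provider installed in this tutorial; newer major versions of the provider configure versioning through a separate resource.

```hcl
provider "aws" {
  region = "eu-central-1" # assumption: use your own region
}

# Bucket that will hold the remote state.
# S3 bucket names are globally unique, so you will likely need a different name.
resource "aws_s3_bucket" "tfstate" {
  bucket = "terraform-tfstate-demo"
  acl    = "private"

  # Assumption: keep older versions of the state file around
  versioning {
    enabled = true
  }
}

# Block any form of public access to the state bucket
resource "aws_s3_bucket_public_access_block" "default" {
  bucket                  = aws_s3_bucket.tfstate.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Lock table: the S3 backend expects a string hash key named LockID
resource "aws_dynamodb_table" "tfstate_lock" {
  name         = "terraform-tfstate-demo-lock"
  billing_mode = "PAY_PER_REQUEST" # assumption
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```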

outputs.tf:
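The original listing is missing here as well. Judging by the bucket and lock_table outputs printed after the apply step, and by the resource addresses in the destroy output, a plausible sketch is:

```hcl
# Name of the S3 bucket that stores the remote state
output "bucket" {
  value = aws_s3_bucket.tfstate.bucket
}

# Name of the DynamoDB table used for state locking
output "lock_table" {
  value = aws_dynamodb_table.tfstate_lock.name
}
```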

When using Terraform, we first need to `init` the module. This step downloads the required modules and provider plugins:

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/aws...

- Installing hashicorp/aws v3.7.0...

- Installed hashicorp/aws v3.7.0 (signed by HashiCorp)

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, we recommend adding version constraints in a required_providers block

in your configuration, with the constraint strings suggested below.

* hashicorp/aws: version = "~> 3.7.0"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running terraform plan to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Per Terraform's recommendation, let's add the version constraint and change the provider block in main.tf to be:
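The original listing is missing, so this is a sketch rather than the author's exact change. It follows the hint in the init output and declares the constraint in a required_providers block; in Terraform 0.13 a version argument inside the provider block also still works, but it is deprecated. The region is an assumption.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.7.0" # constraint suggested by terraform init
    }
  }
}

provider "aws" {
  region = "eu-central-1" # assumption: use your own region
}
```

After changing the constraint, run terraform init again so that Terraform re-checks the installed provider version.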

Now we want to configure authentication for the AWS provider. There is thorough documentation on how to do that, including a few alternatives. We will use the environment variables method:

$ export AWS_ACCESS_KEY_ID=<your access key>

$ export AWS_SECRET_ACCESS_KEY=<your secret access key>

Next, we will plan the changes that we wrote above. It is recommended to plan the changes before applying them every time we're changing infrastructure definitions.

$ terraform plan -out plan.out

Go over the output of the plan and verify that the output matches the changes you would expect to see in the infrastructure.

Finally, let's apply the plan from the previous stage:

$ terraform apply plan.out

...

Outputs:

bucket = terraform-tfstate-demo

lock_table = terraform-tfstate-demo-lock

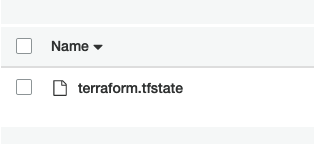

Notice that within the state module directory a new file was created. This is the terraform.tfstate file and it is our local state.

Let's git commit everything (except for the .terraform dir in the state module).

- Here is a link to the S3 backend documentation

- Note that DynamoDB is also used for consistency checking

Create some AWS resources using Terraform

Now that we have the bucket to store the state and the DynamoDB table to lock the state, we can set up our main module's remote state and start creating resources.

For our example, we will create one main module and it will be located in the root of the Git repository.

Edit a new file, state.tf, with the following content:
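The original listing is missing here. A sketch of an S3 backend configuration that uses the bucket and lock table created by the state module might look like this. The key (the object path inside the bucket), the region and the encrypt setting are assumptions.

```hcl
terraform {
  backend "s3" {
    bucket         = "terraform-tfstate-demo"      # output "bucket" of the state module
    key            = "terraform.tfstate"           # assumption: path of the state object
    region         = "eu-central-1"                # assumption: region of the bucket
    dynamodb_table = "terraform-tfstate-demo-lock" # output "lock_table" of the state module
    encrypt        = true                          # assumption: encrypt the state at rest
  }
}
```

Backend blocks cannot use variables or references, which is why the names are written out literally.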

This is the file that configures the remote state for our root module.

This module is going to be a simple one. We will create a single EC2 instance. To do this we will edit three more files:

main.tf (taken from here):
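The original listing and its source link are missing. The only detail confirmed by the rest of this post is the resource address aws_instance.this and the instance_type variable. The AMI lookup below (the latest Ubuntu 20.04 image published by Canonical) and the region are assumptions, so treat this as one possible sketch.

```hcl
provider "aws" {
  region = "eu-central-1" # assumption: use your own region
}

# Assumption: look up the latest Ubuntu 20.04 AMI published by Canonical
data "aws_ami" "ubuntu" {
  most_recent = true
  owners      = ["099720109477"] # Canonical's AWS account ID

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}

# The single EC2 instance managed by the root module
resource "aws_instance" "this" {
  ami           = data.aws_ami.ubuntu.id
  instance_type = var.instance_type
}
```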

variables.tf:
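The original listing is missing. Since the post sets TF_VAR_instance_type, the file presumably declares an instance_type input variable. The type and description below are assumptions.

```hcl
# Set from the environment via TF_VAR_instance_type
variable "instance_type" {
  type        = string
  description = "EC2 instance type for the tutorial instance"
}
```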

outputs.tf
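The original listing is missing. The apply output later in this section prints a public_ip value, so a sketch along these lines seems likely (the description is an assumption):

```hcl
# Public IP address of the EC2 instance created in main.tf
output "public_ip" {
  description = "Public IP of the tutorial instance"
  value       = aws_instance.this.public_ip
}
```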

To set the variable instance_type, we will use the environment variable TF_VAR_instance_type.

If we're using other modules, then we can set variables inside the module block - but that's for another post, for another time.
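Just to illustrate the idea, here is a rough sketch of a module block that passes a value to a child module's input variable. The module name and source path are hypothetical and do not exist in this tutorial's repository.

```hcl
# Hypothetical child module living in ./modules/web_server
module "web_server" {
  source = "./modules/web_server"

  # Sets the child module's "instance_type" input variable
  instance_type = "t3.micro"
}
```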

Run terraform init again, and then we'll set said environment variable and run plan:

$ export TF_VAR_instance_type=t3.micro

$ terraform plan -out=plan.out

Next, verify again that the output matches your planned changes. Once you're satisfied, run apply:

$ terraform apply plan.out

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Releasing state lock. This may take a few moments...

Outputs:

public_ip = 3.123.456.78

Notice that the state was locked, the changes were applied and the `public_ip` output was printed to stdout.

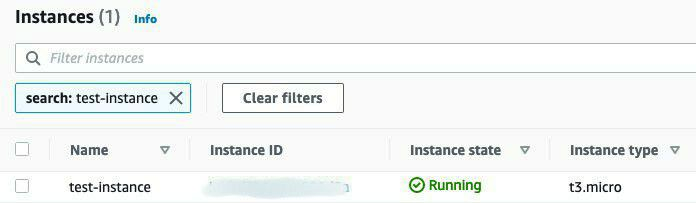

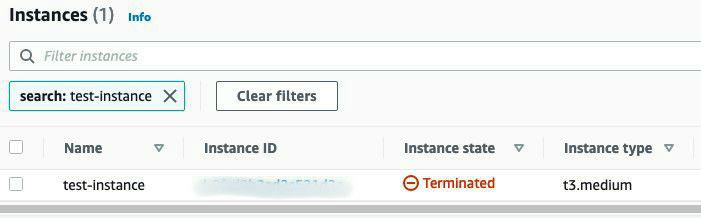

Here's a screenshot of the newly created instance in the AWS console:

Notice also that there isn't any new state file locally, as the state was updated in our S3 bucket! You can verify this by listing the S3 bucket in the console:

Again, git commit all the changes.

Update some AWS resources using Terraform

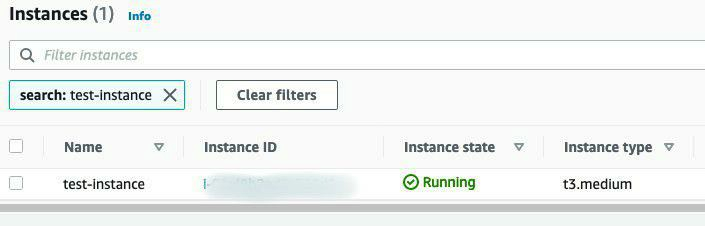

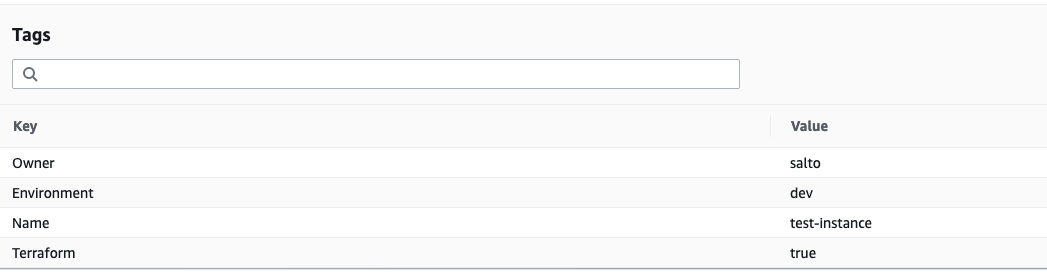

Now, say we want to change the instance type and update the tags on our new instance.

We will simply edit main.tf and add some tags:
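The original listing is missing. As a sketch, the resource block with tags added might look like this. The tag keys and values are hypothetical, and the ami line assumes that a data "aws_ami" "ubuntu" lookup is defined elsewhere in main.tf.

```hcl
resource "aws_instance" "this" {
  # Assumption: AMI comes from a data "aws_ami" "ubuntu" block in the same file
  ami           = data.aws_ami.ubuntu.id
  instance_type = var.instance_type

  # Hypothetical tags
  tags = {
    Name        = "terraform-tutorial"
    Environment = "demo"
  }
}
```

The instance type itself is changed through TF_VAR_instance_type rather than in the file.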

We will plan again:

$ export TF_VAR_instance_type=t3.medium

$ terraform plan -out=plan.out

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# aws_instance.this will be updated in-place

~ resource "aws_instance" "this" {

...

}

Plan: 0 to add, 1 to change, 0 to destroy.

------------------------------------------------------------------------

This plan was saved to: plan.out

To perform exactly these actions, run the following command to apply:

terraform apply "plan.out"

Releasing state lock. This may take a few moments...

Followed by apply again:

$ terraform apply plan.out

aws_instance.this: Modifying... [id=i-xxxxxxxxx]

aws_instance.this: Still modifying... [id=i-xxxxxxxxx, 10s elapsed]

...

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Releasing state lock. This may take a few moments...

Outputs:

public_ip = 3.123.456.78

Now, let's validate that those changes were indeed updated, using the AWS console:

Again, git commit all the changes.

The last two commits contain the creation of our EC2 instance and the modification of it.

We have a change trail and a change log between those commits. This is a very powerful concept in terms of infrastructure, for handling service interruptions, auditing for compliance purposes as well as for many other use cases.

We can, for example, revert the last commit and apply again, or have an automated report generated once a week from all of the Git commits.

Destroy the AWS resources using Terraform

In this last part, we will destroy our resources.

Using Terraform you can destroy and create infrastructure as you please, while the definitions are kept even after the infrastructure is gone:

$ terraform plan -destroy -out plan.out

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# aws_instance.this will be destroyed

- resource "aws_instance" "this" {

...

}

Plan: 0 to add, 0 to change, 1 to destroy.

$ terraform apply plan.out

aws_instance.this: Destroying... [id=i-xxxxxxxxx]

aws_instance.this: Still destroying... [id=i-xxxxxxxxx, 10s elapsed]

...

aws_instance.this: Destruction complete after 51s

Apply complete! Resources: 0 added, 0 changed, 1 destroyed.

Releasing state lock. This may take a few moments...

To conclude this tutorial we will also destroy our remote state module:

$ cd state

$ terraform plan -destroy -out plan.out

- destroy

Terraform will perform the following actions:

...

Plan: 0 to add, 0 to change, 3 to destroy.

Changes to Outputs:

- bucket = "terraform-tfstate-demo" -> null

- lock_table = "terraform-tfstate-demo-lock" -> null

$ terraform apply plan.out

aws_s3_bucket_public_access_block.default: Destroying... [id=terraform-tfstate-demo]

aws_dynamodb_table.tfstate_lock: Destroying... [id=terraform-tfstate-demo-lock]

aws_s3_bucket_public_access_block.default: Destruction complete after 1s

aws_s3_bucket.tfstate: Destroying... [id=terraform-tfstate-demo]

aws_dynamodb_table.tfstate_lock: Destruction complete after 3s

aws_s3_bucket.tfstate: Destruction complete after 2s

Apply complete! Resources: 0 added, 0 changed, 3 destroyed.

Again, git commit all the changes.

Summary

We started with creating a Git repository with a state module to bootstrap our team's Git repository for managing infrastructure using Terraform.

We then moved on to create an EC2 resource using Terraform and a remote state, changed the resource and destroyed all the resources.

This example shows you how using Terraform, especially in combination with Git, can have many benefits in managing your infrastructure.

There are many ways of using Terraform and its backends, a whole lot of providers to choose from, and plenty of third party modules to use. There are many things to consider when making your choices - the size of the team, security and compliance policies of the company, cloud providers, as well as others. But that is a topic for a different post.